Is your IP getting blacklisted when you crawl or scrape with Puppeteer? Are you tired of getting blocked by reCAPTCHA? Worry no more!

In this post, we explain how to avoid being detected as a bot when using Puppeteer, and which IP solutions work best to avoid getting blacklisted and blocked while scraping with Puppeteer.


What is Puppeteer?

Puppeteer is a tool for web developers built by Google. It is a Node library with a high-level API for controlling Chrome and Chromium, in either headless or non-headless mode.

A web browser without a user interface is called a headless browser, and it lets you control a web page programmatically. Because the automation runs on top of a real browser, you no longer need to execute JavaScript, render pages or follow redirects yourself: the browser does that for you.

This method allows for successful and accurate access to target websites that implement blocking techniques through monitoring the cookies and headers of those accessing their site.
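A minimal sketch of that high-level API: launch a headless browser, load a page, read its title and close the browser. The hypothetical `captureTitle` helper takes the Puppeteer module as a parameter (an assumption made only so it can be exercised without a real browser); `launch`, `newPage`, `goto`, `title` and `close` are standard Puppeteer methods.

```javascript
// Hypothetical helper: open a URL in a headless browser and return the page title.
// `puppeteerLib` is the Puppeteer module, e.g. require('puppeteer').
async function captureTitle(puppeteerLib, url, { headless = true } = {}) {
  const browser = await puppeteerLib.launch({ headless });
  try {
    const page = await browser.newPage();
    // Wait until network activity settles so client-side rendering has finished.
    await page.goto(url, { waitUntil: 'networkidle2' });
    return await page.title();
  } finally {
    // Always release the browser process, even if navigation fails.
    await browser.close();
  }
}
```

For example: `captureTitle(require('puppeteer'), 'https://example.com').then(console.log);`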

Why use a headless browser like Puppeteer for testing or scraping?

The main benefit of using a headless browser is that you can automate your testing and scraping operations. A headless browser controlled by Puppeteer lacks Flash Player and other plugins that expose information about the user to target websites. With fewer of these signals to worry about, you can increase your success rate, and Puppeteer can help you avoid getting blacklisted while scraping.

Puppeteer is an easy-to-use automation tool compared to other headless browser tools that require more technical expertise. Built for Chrome, Puppeteer is used to test and automate web applications by simulating real-user behavior. It lets you test a site's user interface to make sure it behaves as developers expect.

With Puppeteer, you can generate a screenshot of the final page with little to no effort, without opening a visible browser window. Puppeteer also lets you use incognito mode, which starts each session in a neutral environment with no cookies and no cache carried over from earlier sessions. Every new session therefore starts with a clean slate, although the browser's underlying device fingerprint stays the same.
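A minimal sketch of opening a page in a fresh, isolated context. Puppeteer v22 and later provide `browser.createBrowserContext()`, while earlier releases called it `createIncognitoBrowserContext()`; the hypothetical `openIsolatedPage` helper feature-detects which one your installed version has.

```javascript
// Hypothetical helper: open a page in a new browser context whose cookies,
// cache and storage are not shared with other contexts.
async function openIsolatedPage(browser) {
  const context =
    typeof browser.createBrowserContext === 'function'
      ? await browser.createBrowserContext() // Puppeteer v22+
      : await browser.createIncognitoBrowserContext(); // older releases
  const page = await context.newPage();
  // Return the context too, so the caller can close it (and its pages) later.
  return { context, page };
}
```

Close the context with `await context.close()` when you are done; that also closes every page opened in it.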


Why are Puppeteer proxies needed?

Working with an automation tool like Puppeteer lets you code every aspect of an environment, but the one thing you cannot code is your IP address.

Websites can easily detect web scraping activity based on the IP address. Even during normal browsing, Google sometimes asks you to complete a CAPTCHA because it has flagged you as a bot.

Proxies are needed to test your application in a different country or city. They are also a must if you require scraping multiple pages. Not only will a proxy network allow you to simulate a real-user in the location you require but it will keep you anonymous and provide the real-time, accurate data you need.

With a Puppeteer proxy, you can run multiple browsers simultaneously, each from a unique IP, and test the performance and speed of the site or application.
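A minimal sketch of the one-browser-per-IP setup, assuming you already have a list of proxy endpoints in `host:port` form. The hypothetical `launchOptionsPerProxy` helper builds one `puppeteer.launch()` options object per proxy using Chromium's `--proxy-server` flag.

```javascript
// Hypothetical helper: build one set of puppeteer.launch() options per proxy,
// so each browser instance exits through a different IP address.
function launchOptionsPerProxy(proxies, { headless = true } = {}) {
  if (!Array.isArray(proxies) || proxies.length === 0) {
    throw new Error('Provide at least one proxy as "host:port"');
  }
  return proxies.map((proxy) => ({
    headless,
    // Chromium sends all of this browser's traffic through the given proxy.
    args: [`--proxy-server=${proxy}`],
  }));
}
```

For example, `Promise.all(launchOptionsPerProxy(list).map((opts) => puppeteer.launch(opts)))` starts one browser per proxy. Proxies that require a username and password still need `page.authenticate()` on each page.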

Why do I recommend Luminati with Puppeteer?

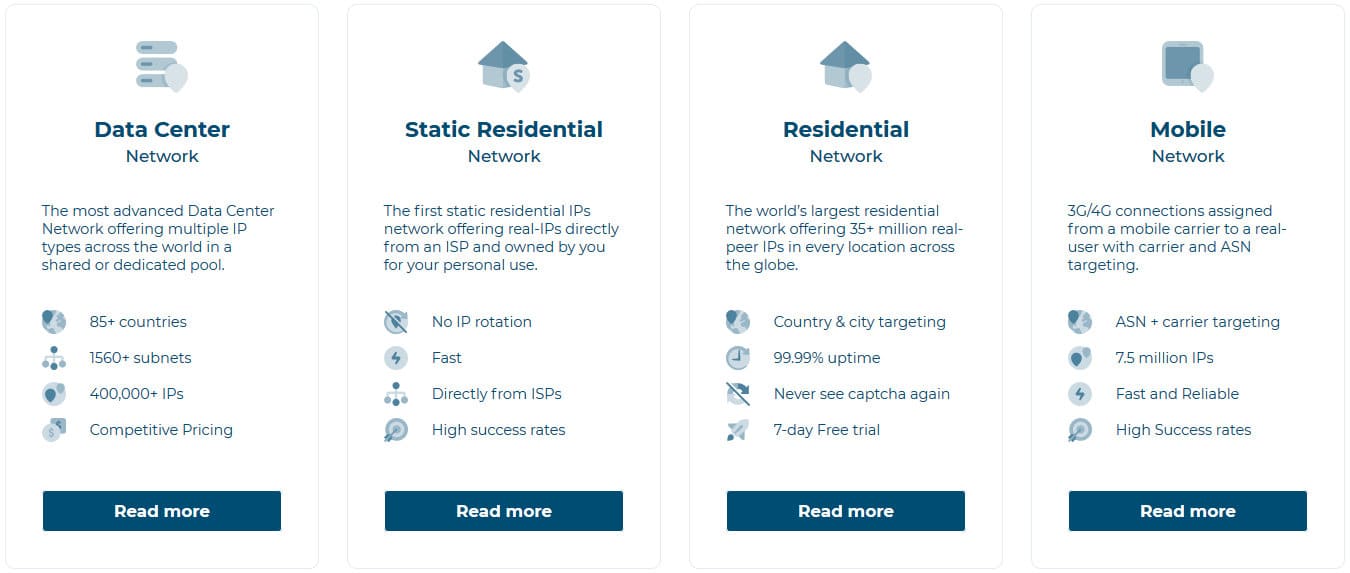

Luminati offers four separate networks:

- Datacenter Network

- Rotating Residential Network

- Dedicated ISP IPs (Static Residential)

- Mobile Network

All of them support country and city targeting. With its many product offerings and more than 11 IP types, Luminati has everything you need to successfully scrape and test the sites you require.

Luminati also offers a free, open-source proxy manager which allows you to control your proxies and their parameters with the ease of a simple drop-down menu.

Within the Luminati Proxy Manager, you can choose your preferred user-agent or employ random user agents on each request. The software also supports custom user agents and headers.
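If you are not routing through the Proxy Manager, you can rotate user agents in Puppeteer itself. A minimal sketch, assuming you maintain your own list of user-agent strings: the hypothetical `applyRandomUserAgent` helper picks one at random and applies it with Puppeteer's `page.setUserAgent()`.

```javascript
// Hypothetical helper: pick a user agent at random and apply it to a Puppeteer page.
// `rng` defaults to Math.random and can be replaced by a seeded generator in tests.
async function applyRandomUserAgent(page, userAgents, rng = Math.random) {
  if (userAgents.length === 0) throw new Error('userAgents must not be empty');
  // rng() returns a value in [0, 1), so the index is always in range.
  const index = Math.floor(rng() * userAgents.length);
  const userAgent = userAgents[index];
  // Overrides the User-Agent header (and navigator.userAgent) for this page.
  await page.setUserAgent(userAgent);
  return userAgent;
}
```

Call it before `page.goto()` so the first request already carries the chosen user agent.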

By automating your browser with the use of proxies you can quickly and easily test your applications, generate screenshots and ensure the user experience you desire!

Can't afford Luminati? There are other residential proxy providers, covered later in this post.

How to connect Puppeteer with Luminati’s Super Proxies

- Begin by going to your Luminati Dashboard and clicking ‘create a zone’.

- Choose ‘Network type’ and click save.

- In Puppeteer, fill in the ‘Proxy IP:Port’ as the ‘proxy-server’ value, for example, zproxy.lum-superproxy.io:22225.

- In the ‘page.authenticate’ call, enter your Luminati customer ID and proxy zone name in the ‘username’ value (for example, lum-customer-CUSTOMER-zone-YOURZONE), and your zone password, found in the zone settings, in the ‘password’ value.

For example:

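```javascript
const puppeteer = require('puppeteer');

(async () => {
  const browser = await puppeteer.launch({
    headless: false,
    // Route all browser traffic through Luminati's Super Proxy.
    args: ['--proxy-server=zproxy.lum-superproxy.io:22225'],
  });

  const page = await browser.newPage();

  // Answer the proxy's authentication challenge with your zone credentials.
  await page.authenticate({
    username: 'lum-customer-CUSTOMER-zone-YOURZONE',
    password: 'PASSWORD',
  });

  // lumtest.com/myip.json reports the IP address the request arrived from.
  await page.goto('http://lumtest.com/myip.json');
  await page.screenshot({ path: 'example.png' });

  await browser.close();
})();
```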
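To confirm that traffic actually leaves through the proxy, you can read the response from lumtest.com/myip.json instead of only taking a screenshot. A minimal sketch: the hypothetical `checkExitIp` helper uses Puppeteer's `HTTPResponse.ok()`, `status()` and `json()`, and assumes (we have not confirmed the exact schema) that the endpoint's JSON includes an `ip` field.

```javascript
// Hypothetical helper: load lumtest.com/myip.json through the configured proxy
// and return the IP address the endpoint reports.
async function checkExitIp(page, url = 'http://lumtest.com/myip.json') {
  const response = await page.goto(url);
  // page.goto() can resolve to null (e.g. for same-document navigations).
  if (!response || !response.ok()) {
    throw new Error(`IP check failed with status ${response ? response.status() : 'n/a'}`);
  }
  // Assumption: the endpoint returns a JSON object with an "ip" field.
  const data = await response.json();
  return data.ip;
}
```

Call `await checkExitIp(page)` after `page.authenticate()`; with a rotating zone, repeated calls may report different addresses.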

How to connect Puppeteer with Luminati’s Proxy Manager

- Create a zone with the network, IP type and number of IPs you wish to use.

- Install the Luminati Proxy Manager.

- Click ‘add new proxy’ and choose the zone and settings you require, click ‘save’.

- In Puppeteer, set the ‘proxy-server’ value to your local IP and Proxy Manager port (e.g. 127.0.0.1:24000):

  - The localhost IP is 127.0.0.1.

  - The port created in the Luminati Proxy Manager is 24XXX, for example, 24000.

- Leave the username and password values empty, as the Luminati Proxy Manager has already been authenticated with the Super Proxy.

For example:

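```javascript
const puppeteer = require('puppeteer');

(async () => {
  const browser = await puppeteer.launch({
    headless: false,
    // Point Chromium at the local Luminati Proxy Manager port.
    args: ['--proxy-server=127.0.0.1:24000'],
  });

  const page = await browser.newPage();

  // No page.authenticate() call is needed: the Proxy Manager already
  // authenticates with the Super Proxy on your behalf.

  await page.goto('http://lumtest.com/myip.json');
  await page.screenshot({ path: 'example.png' });

  await browser.close();
})();
```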
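If you create several ports in the Proxy Manager, each Puppeteer browser can point at a different one. A minimal sketch, assuming the ports were created consecutively starting at 24000 (your Proxy Manager may show different numbers): the hypothetical `proxyManagerEndpoints` helper lists the local `host:port` values to pass to `--proxy-server`.

```javascript
// Hypothetical helper: list Proxy Manager endpoints for several consecutive local ports.
// Assumes the ports were created in sequence (24000, 24001, ...).
function proxyManagerEndpoints(count, firstPort = 24000, host = '127.0.0.1') {
  if (!Number.isInteger(count) || count < 1) {
    throw new Error('count must be a positive integer');
  }
  return Array.from({ length: count }, (_, i) => `${host}:${firstPort + i}`);
}
```

Each endpoint can then be passed as `--proxy-server=${endpoint}` in `puppeteer.launch()`; as with the single-port example, no credentials are needed on the Puppeteer side.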

Using Puppeteer in tandem with the Luminati proxy service lets you automate your operations with ease.

By combining the two, you can manipulate every request you send and see how the site or application responds. This allows for the most accurate web data extraction and a true look into the user experience of applications that require testing.
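One way to manipulate requests is Puppeteer's request interception. The hypothetical `blockResourceTypes` helper below aborts images, fonts and media and lets everything else through; we assume here that you want to cut bandwidth through a metered proxy, and the default list of blocked types is only an example. `page.setRequestInterception()`, `request.resourceType()`, `request.abort()` and `request.continue()` are Puppeteer APIs.

```javascript
// Hypothetical helper: intercept every request a page makes and abort
// the resource types you do not need (images, fonts and media by default).
async function blockResourceTypes(page, blocked = ['image', 'font', 'media']) {
  const blockedSet = new Set(blocked);
  // Interception must be enabled before requests can be aborted or continued.
  await page.setRequestInterception(true);
  page.on('request', (request) => {
    if (blockedSet.has(request.resourceType())) {
      request.abort();
    } else {
      request.continue();
    }
  });
}
```

Call it right after `browser.newPage()` and before `page.goto()`, so the very first requests are already filtered.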

Other Recommended Proxy Providers

Nimbleway for Puppeteer

Nimbleway is another provider I recommend, and I consider it the best Puppeteer proxy for enterprise usage. Whether you need proxies for large-scale web scraping or for automation tasks that depend on session management, their proxies fit the job. It has a large pool of IP addresses, and it supports automatic IP rotation while going further than what other providers offer.

Nimble uses an AI optimization engine to choose the best IP address for your target on each request, which increases the chances of your requests succeeding. Although it is a rotating proxy, it is one of the best for session management: it gives you control over your sessions and lets you keep a session alive for a long period of time. It is also one of the fastest residential proxies on the market.

However, it is one of the most expensive providers out there. You need a minimum of $600 to get started, which gives you 75GB (about $8 per GB). KYC (know-your-customer) verification is compulsory, and enterprise users get a 22GB free trial after passing it.

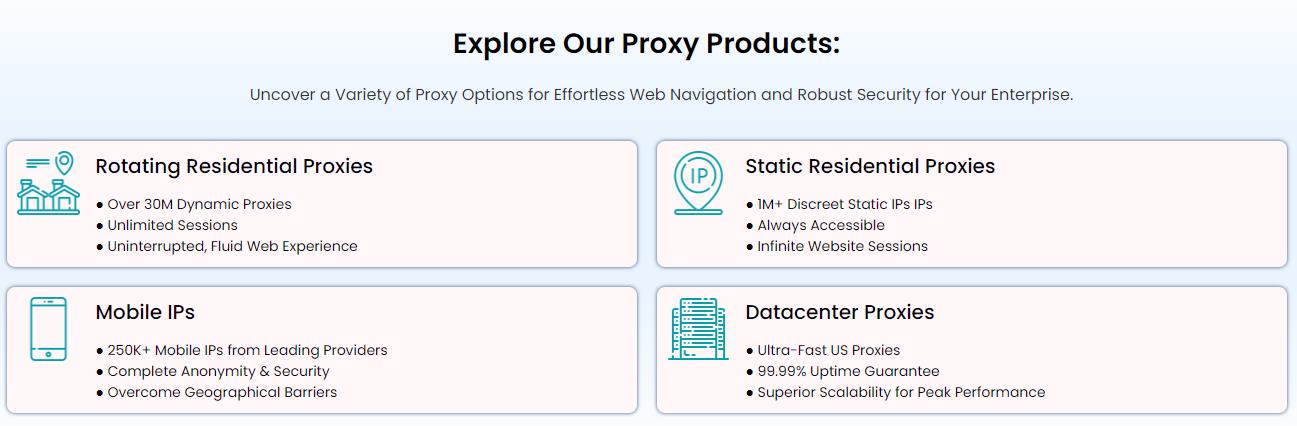

NetNut for Puppeteer

NetNut is also a great option for powering your Puppeteer data and web scraping tool. It offers roughly the same features as our other recommendation. It comes with over 52 million residential IPs, a respectable 1 million of which are static and private. It also supports country- and city-level geo-targeting, with several products and proxy types available in over 200 countries.

NetNut also has a comprehensive dashboard where you can monitor your activity on the proxy network. Combine that with the Chrome DevTools you can open alongside a Puppeteer-controlled browser, and you have a strong combination.

API design is also a strong point of the NetNut package. Integrating their API into your existing Puppeteer setup is seamless and painless. Automation with Puppeteer and other excellent web scraping tools is also highly supported and encouraged.

Where NetNut perhaps pulls ahead is in assigning a dedicated account manager to help sort things out if you run into difficulties with their platform. This shouldn't be overlooked. Technical things don't always work as expected, so an experienced account manager can come in handy when you least expect it.

When it comes to pricing, we like their six-tier plan structure, which lets businesses fit a plan to their needs and budget quite easily. You can also use the generous 7-day free trial to check whether the service meets your expectations, specifically when paired with Puppeteer.
